Don't Judge Me...

How I Used Claude and the Real Housewives to Learn How to Program

Let me set the scene.

It's late. I'm supposed to be writing something important. Instead, I'm watching Porsha drag Kenya Moore across my screen, not on Bravo, but inside an interactive network visualization I built myself. In my browser. Using actual code.

I know. I know.

But hear me out, because what happened between me, a D3.js network graph, and the entire Real Housewives franchise might just change how you think about learning to program.

I've Been "About to Learn to Code" for Years

I have a Ph.D. I teach faculty how to design courses. I study how people learn. And for years (embarrassingly many years) I could not make programming stick.

I took a Python course. I understood it in the moment. Two weeks later? Gone. I worked through a JavaScript tutorial. Same story. I'd get excited, do a few lessons, and then life would intervene and I'd lose the thread entirely.

The problem wasn't the content. It was that I had no reason to keep going. No project. No stakes. No drama.

Enter: Claude.

The Conversation That Started Everything

I was sitting in on a meeting with a colleague (a genuinely brilliant person who builds interactive educational tools for fun), and he was moving fast. He showed me a network visualization he'd built using something called D3.js and an AI coding tool called Codex. Characters from Game of Thrones were bouncing around the screen, connected by lines showing their relationships. You could drag them. Zoom in. Explore.

It was maybe 300 lines of code. He built it by describing what he wanted in plain English and letting the AI write it.

I thought: I could do that. But with something I actually care about.

And what do I care about? Among other things: the Real Housewives of Atlanta, New York, Beverly Hills, New Jersey, and Potomac. Don't @ me.

What I Actually Did (It's Simpler Than You Think)

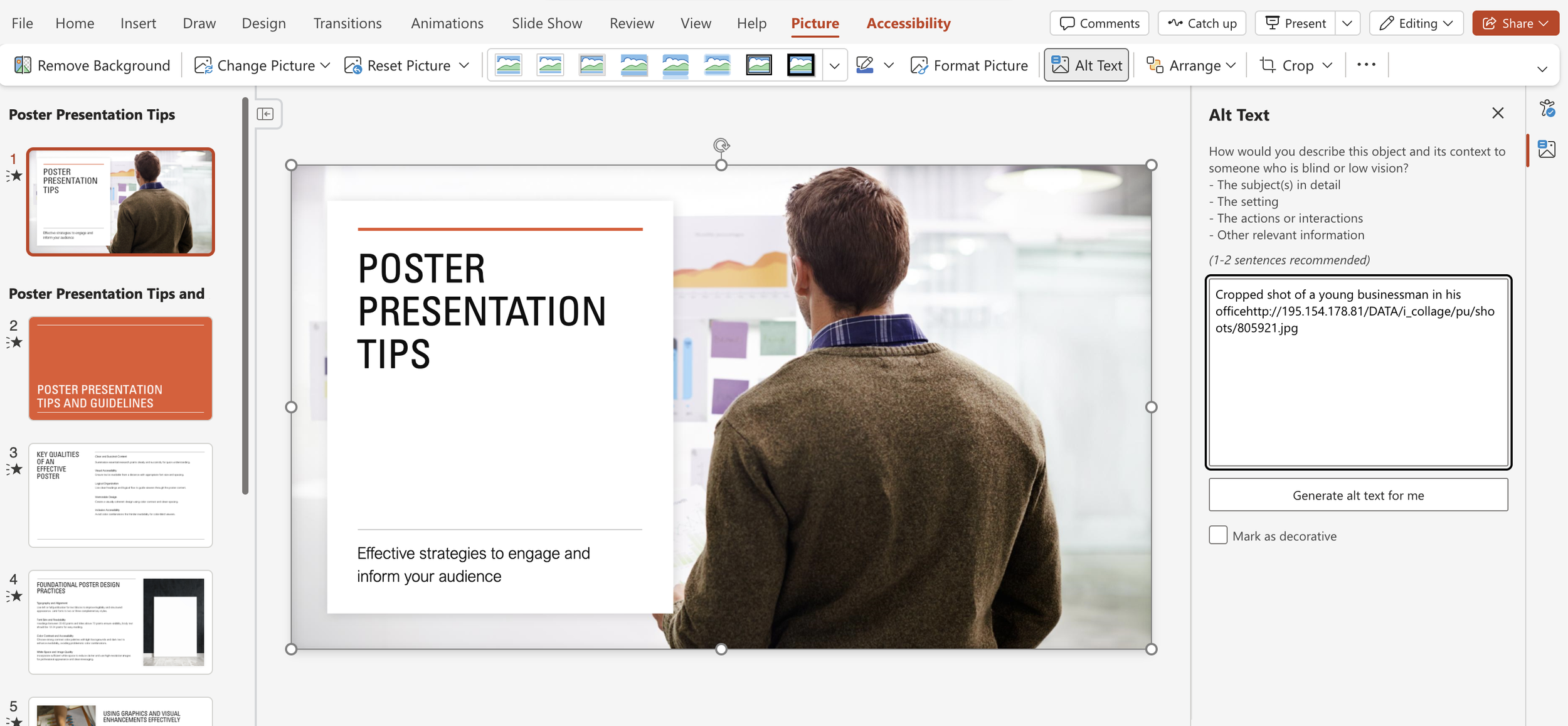

I opened Claude and typed, almost verbatim, this prompt:

"Create a simple D3.js network visualization as a single HTML file. Show connections between Real Housewives. I want to be able to drag the nodes around."

That's it. That was the whole prompt.

What came back was a single HTML file with NeNe, Kenya, Porsha, Bethenny, Teresa, and fifteen of their castmates mapped out in a glowing, color-coded, draggable constellation. Each franchise had its own color. Each relationship (friendship, feud, alliance, family, frenemy) had its own line style. Hover over a node and you get the tea. Literally. A little bio pops up.

I double-clicked the file. It opened in my browser. And I sat there for a solid minute just dragging housewives around my screen like a woman who had finally arrived.
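For the curious, here's roughly what "each franchise gets its own color, each relationship gets its own line style" can look like in code. This is a minimal TypeScript sketch, not the file Claude actually gave me: the type names, hex colors, and dash patterns are made-up placeholders. The idea is just two lookup tables that translate a piece of data (a franchise, a relationship type) into a visual property.

```typescript
// Hypothetical category names; the real file's structure isn't shown in this post.
type Franchise = "Atlanta" | "New York" | "Beverly Hills" | "New Jersey" | "Potomac";
type Relation = "friendship" | "feud" | "alliance" | "family" | "frenemy";

// One node color per franchise (placeholder hex values).
const franchiseColor: Record<Franchise, string> = {
  "Atlanta": "#e4572e",
  "New York": "#17bebb",
  "Beverly Hills": "#ffc914",
  "New Jersey": "#76b041",
  "Potomac": "#9b5de5",
};

// One SVG stroke-dasharray pattern per relationship type; "none" draws a solid line.
const relationDash: Record<Relation, string> = {
  friendship: "none",
  feud: "8,4",
  alliance: "2,3",
  family: "12,3",
  frenemy: "8,3,2,3",
};

// Example lookups: what color an Atlanta node gets, how a feud line is dashed.
console.log(franchiseColor["Atlanta"], relationDash["feud"]);
```

Because `Record<Relation, string>` requires every relationship type as a key, TypeScript complains if you add a new type to the list without giving it a style. In a D3 file, tables like these would typically be plugged in with `selection.attr`, for example `.attr("stroke-dasharray", d => relationDash[d.type])`.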

But Here's the Part That Actually Made Me Learn

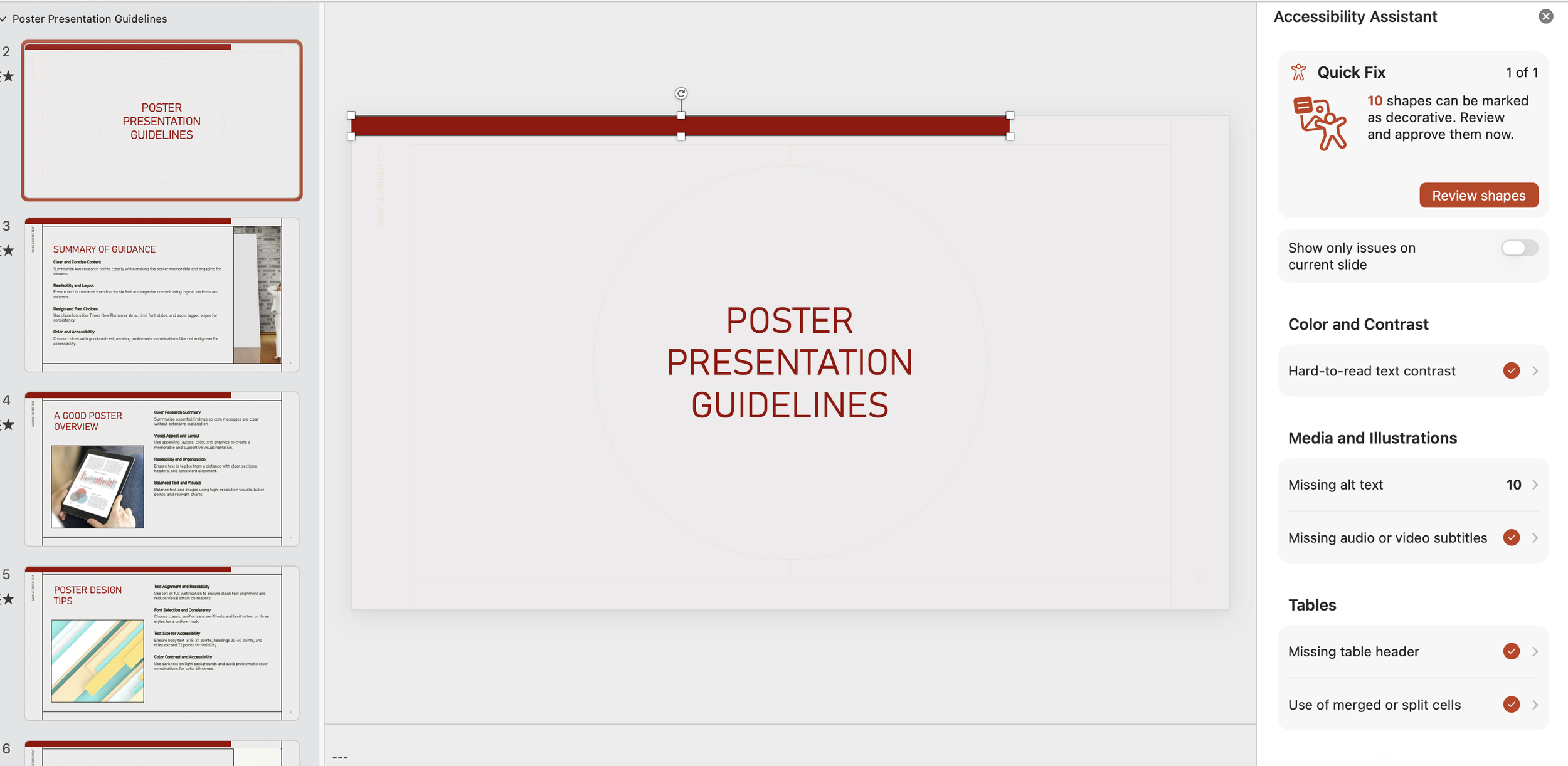

I didn't stop at "ooh, pretty." I started asking questions. Which part of this code is actually about the Housewives, and which part is the instructions for drawing it?

Claude showed me. The data lived in two sections: the cast list (readable by any human; you could add a new housewife just by copying a line) and the drama list (the relationships: who connects to whom). Everything else (the bouncing physics, the color gradients, the hover effects) was D3.js doing the heavy lifting.
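To make that split concrete, here's a minimal TypeScript sketch of what a cast list and a drama list can look like. The field names (`id`, `franchise`, `bio`, `source`, `target`, `type`) and the `connectionsOf` helper are my own assumptions for illustration, not copied from the actual file, and the bios are placeholders. The single relationship line (Porsha and Kenya, labeled a feud) is there purely as an example of the format.

```typescript
// Hypothetical shape of one entry in the cast list (the nodes).
interface Housewife {
  id: string;        // unique name that relationships refer to
  franchise: string; // used to pick the node's color
  bio: string;       // text for the hover tooltip
}

// Hypothetical shape of one entry in the drama list (the links).
interface Relationship {
  source: string; // id of one housewife
  target: string; // id of the other
  type: "friendship" | "feud" | "alliance" | "family" | "frenemy";
}

// The cast list: adding a housewife means copying one line and editing it.
const cast: Housewife[] = [
  { id: "NeNe", franchise: "Atlanta", bio: "Placeholder bio" },
  { id: "Kenya", franchise: "Atlanta", bio: "Placeholder bio" },
  { id: "Porsha", franchise: "Atlanta", bio: "Placeholder bio" },
  { id: "Bethenny", franchise: "New York", bio: "Placeholder bio" },
  { id: "Teresa", franchise: "New Jersey", bio: "Placeholder bio" },
];

// The drama list: one line per connection (illustrative entry only).
const drama: Relationship[] = [
  { source: "Porsha", target: "Kenya", type: "feud" },
];

// Everyone directly connected to a given housewife, in either direction.
function connectionsOf(name: string): string[] {
  return drama
    .filter((r) => r.source === name || r.target === name)
    .map((r) => (r.source === name ? r.target : r.source));
}

console.log(connectionsOf("Kenya"));
```

Notice that neither list says anything about drawing. In a typical D3 force layout, arrays shaped like these become the nodes and links the simulation works on, which is why editing them is all it takes to change who shows up on screen.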

Once I understood that, I started poking around. I changed a color. I added a housewife. I broke something, showed Claude the error, and it fixed it while explaining what went wrong. Then I tried to fix the next thing myself before asking.
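One classic way to break a file like this: add a relationship whose name doesn't exactly match anyone in the cast list (a typo, a nickname). When D3's link force is told to match links by name, with `forceLink().id(d => d.id)`, a mismatch stops the layout with a "node not found" error. Here's a small TypeScript sketch of a check you could run to find the culprit yourself; `findMissingIds` is a hypothetical helper made up for illustration, not part of D3.

```typescript
interface NodeDatum { id: string; }
interface LinkDatum { source: string; target: string; }

// Hypothetical helper: list every link endpoint that matches no node id.
function findMissingIds(nodes: NodeDatum[], links: LinkDatum[]): string[] {
  const known = new Set(nodes.map((n) => n.id));
  const missing: string[] = [];
  for (const link of links) {
    for (const end of [link.source, link.target]) {
      // Report each unknown name once, in the order it first appears.
      if (!known.has(end) && !missing.includes(end)) missing.push(end);
    }
  }
  return missing;
}

const nodes: NodeDatum[] = [{ id: "Porsha" }, { id: "Kenya" }];
const links: LinkDatum[] = [{ source: "Porsha", target: "Kenyah" }]; // deliberate typo

console.log(findMissingIds(nodes, links)); // returns ["Kenyah"]
```

Fix the spelling in either list and the check comes back empty, which is exactly the kind of "try it myself before asking" debugging step that started to make things click.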

What I Actually Learned (For Real This Time)

- What a JavaScript library is and why D3.js exists (it's a pre-built toolbox so you don't have to write the physics of bouncing dots from scratch)

- What nodes and links are in network visualization (dots = things, lines = relationships, that's it)

- How a single HTML file can hold the page structure (HTML), the styling (CSS), and the behavior (JavaScript) all in one place

- What it means to declare a variable and store data in it

- How GitHub Pages lets you turn a file on your computer into a real website anyone can visit
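About that first bullet: here is a deliberately tiny TypeScript sketch of the kind of physics D3's force simulation saves you from writing. It is a simplified stand-in, not how d3-force is actually implemented (the real library adds a cooling "alpha" schedule, a faster approximation for the repulsion, and more), and the `tick` function and its parameter values are my own illustrative assumptions. Each step, linked nodes are pulled toward a preferred distance like springs, every pair of nodes pushes apart, and friction slows everything down until the dots settle.

```typescript
interface Point { id: string; x: number; y: number; vx: number; vy: number; }
interface Edge { source: string; target: string; }

// One simplified simulation step (illustrative parameters, not D3's defaults).
function tick(
  points: Point[],
  edges: Edge[],
  linkLength = 60, // preferred distance between linked nodes
  stiffness = 0.1, // how strongly each link acts like a spring
  repulsion = 200, // how hard every pair of nodes pushes apart
  friction = 0.4,  // fraction of velocity lost each step
): void {
  const byId = new Map(points.map((p): [string, Point] => [p.id, p]));

  // Springs: pull (or push) linked nodes toward linkLength apart.
  for (const e of edges) {
    const a = byId.get(e.source);
    const b = byId.get(e.target);
    if (!a || !b) continue; // skip links that point at unknown ids
    const dx = b.x - a.x;
    const dy = b.y - a.y;
    const dist = Math.hypot(dx, dy) || 1e-6;
    const f = ((dist - linkLength) / dist) * stiffness;
    a.vx += dx * f; a.vy += dy * f;
    b.vx -= dx * f; b.vy -= dy * f;
  }

  // Repulsion: every pair pushes apart, more strongly when close.
  for (let i = 0; i < points.length; i++) {
    for (let j = i + 1; j < points.length; j++) {
      const a = points[i];
      const b = points[j];
      const dx = b.x - a.x;
      const dy = b.y - a.y;
      const d2 = dx * dx + dy * dy || 1e-6;
      const f = repulsion / d2;
      a.vx -= dx * f; a.vy -= dy * f;
      b.vx += dx * f; b.vy += dy * f;
    }
  }

  // Friction, then move each node by its velocity.
  for (const p of points) {
    p.vx *= 1 - friction;
    p.vy *= 1 - friction;
    p.x += p.vx;
    p.y += p.vy;
  }
}

// Two linked dots start far apart and settle at a stable distance.
const dots: Point[] = [
  { id: "Porsha", x: 0, y: 0, vx: 0, vy: 0 },
  { id: "Kenya", x: 200, y: 0, vx: 0, vy: 0 },
];
const pair: Edge[] = [{ source: "Porsha", target: "Kenya" }];
for (let step = 0; step < 300; step++) tick(dots, pair);
const settled = Math.hypot(dots[1].x - dots[0].x, dots[1].y - dots[0].y);
console.log(settled.toFixed(1));
```

With these made-up numbers the pair settles at roughly 84 units apart rather than exactly 60, because the repulsion keeps pushing back against the spring. Multiply that by twenty housewives and dozens of relationships, and you can see why "let the library do it" is the right call.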

None of this came from a tutorial. It came from having a specific thing I wanted, building it, and then being curious about how it worked.

The Bigger Thing I'm Sitting With

I study learning. I've spent 15+ years thinking about how people acquire knowledge, what makes it stick, what makes it fall off. And I have to be honest with myself: the approach I just described (interest-driven, project-based, AI-assisted) is exactly what the research says works best.

It's just-in-time learning. You need to know something, so you learn it. You have a reason to remember it because it's connected to something you made.

What AI tools like Claude have changed is the barrier to entry: it's now low enough that you can build something first and learn the concepts as you need them. That's not cheating. That's smart instructional design.

Should You Try This?

Yes. Especially if you've been "about to learn to code" for a while.

Think of something you actually care about (a TV show, a research interest, your family tree, your favorite athletes) and ask Claude to build a network visualization of it. Drag the nodes around for a minute. Then ask: "Which part of this code is actually my data?" That question will take you further than any tutorial I've tried.

And Andy Cohen, if you're reading this... can we talk about a collaboration? I'm thinking interactive franchise maps, relationship timelines, reunion drama visualized in real time. I have the vision. I have the AI. I have the love for the franchise.

I'm just not built to be a Housewife myself. The taglines alone would stress me out.